In the past three weeks, media coverage of the artificial intelligence sector has turned pessimistic. Outlets like Bloomberg, The Information, and TechCrunch have all documented challenges at leading model providers like OpenAI, Anthropic, and Google. The problem? Large language models (LLMs) are no longer improving at the same rate as they did in the late 2010s and early 2020s.

For some context, the LLMs that have captivated attention in the past two years (GPT, Claude, Gemini, etc.) have been able to deliver immense progress thanks to scaling laws. Instead of meticulous model molding or new theorem discovery - relatively speaking - AI researchers saw the most progress from simply increasing the amount of data and compute used in model training. Increasing the amount of training data and number of GPUs reliably improved results. This relationship was documented formally back in 2020.
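To make the shape of that relationship concrete, here is a minimal sketch that assumes a simple power law of the form loss = (scale / compute) ** alpha. The function names and constants are illustrative placeholders, not fitted values from any paper or lab. The only point is that each 10x increase in compute buys a smaller absolute drop in loss than the one before it.

```python
def power_law_loss(compute: float, scale: float = 1.0, alpha: float = 0.05) -> float:
    """Hypothetical loss curve: loss falls as a power of compute.

    `scale` and `alpha` are made-up illustrative constants (assumptions).
    """
    if compute <= 0:
        raise ValueError("compute must be positive")
    return (scale / compute) ** alpha


def gains_per_10x(start: float, steps: int) -> list[float]:
    """Absolute loss reduction from each successive 10x increase in compute."""
    gains = []
    compute = start
    for _ in range(steps):
        before = power_law_loss(compute)
        after = power_law_loss(compute * 10)
        gains.append(before - after)
        compute *= 10
    return gains


if __name__ == "__main__":
    for i, gain in enumerate(gains_per_10x(start=1.0, steps=5), start=1):
        print(f"10x step {i}: loss drops by {gain:.4f}")
```

Under these assumed constants the model keeps improving with every step, just by less each time, which is the "diminishing returns" pattern in a nutshell.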

Think of LLMs and scaling laws like a lumberjack with an axe. For a while, all they needed for improvement was a bigger, sharper axe.

Unfortunately, as the aforementioned articles document, the scaling laws that have worked to date are starting to run dry. It’s not that models can no longer improve. Rather, model improvements from scaling aren’t the revolutionary breakthroughs that the industry seems to have gotten used to. From Bloomberg:

“After years of pushing out increasingly sophisticated AI products at a breakneck pace, three of the leading AI companies are now seeing diminishing returns from their costly efforts to build newer models. At Alphabet Inc.’s Google, an upcoming iteration of its Gemini software is not living up to internal expectations, according to three people with knowledge of the matter. Anthropic, meanwhile, has seen the timetable slip for the release of its long-awaited Claude model called 3.5 Opus.”

Partially victims of their own success, AI companies have signed up for (i) lots of progress on (ii) a very ambitious timetable. None of this is to say that AI progress is done for. There are several reasons to hope for progress:

Elon Musk’s xAI is ramping up a cluster of GPUs called Colossus. 100K centrally organized chips at a facility in Memphis could reach new levels of performance.

Sadly this is not on site at the Bass Pro Shops Pyramid

“Test-time compute”, which translates to giving models extra time / resources to work with, is being cited by experts & investors as a new catalyst for model improvement.

On the interface side, many people believe that applications reliant upon big LLMs have not scratched the surface for optimization and usability.

Whether or not scaling laws hold, there’s still runway for innovation. But the promises of all powerful models and AGI seem a bit further afield without the traditional tailwind of scaling laws. The current trajectory is starting to chart into the unknown.

Calling all Agents

In 2024, but especially within the past three months, a new AI buzzword has taken center stage for product strategy. The AI agent. Or, for even more bonus points, agentic.

In simplified terms, an agent uses AI to link and automate a series of tasks. Compared to traditional software, AI agents have more control to stitch together steps in a process. For example, this Menlo Ventures writeup documented how a formerly manual PDF scan can be decomposed into smaller tasks, all of which are outsource-able to an AI agent.

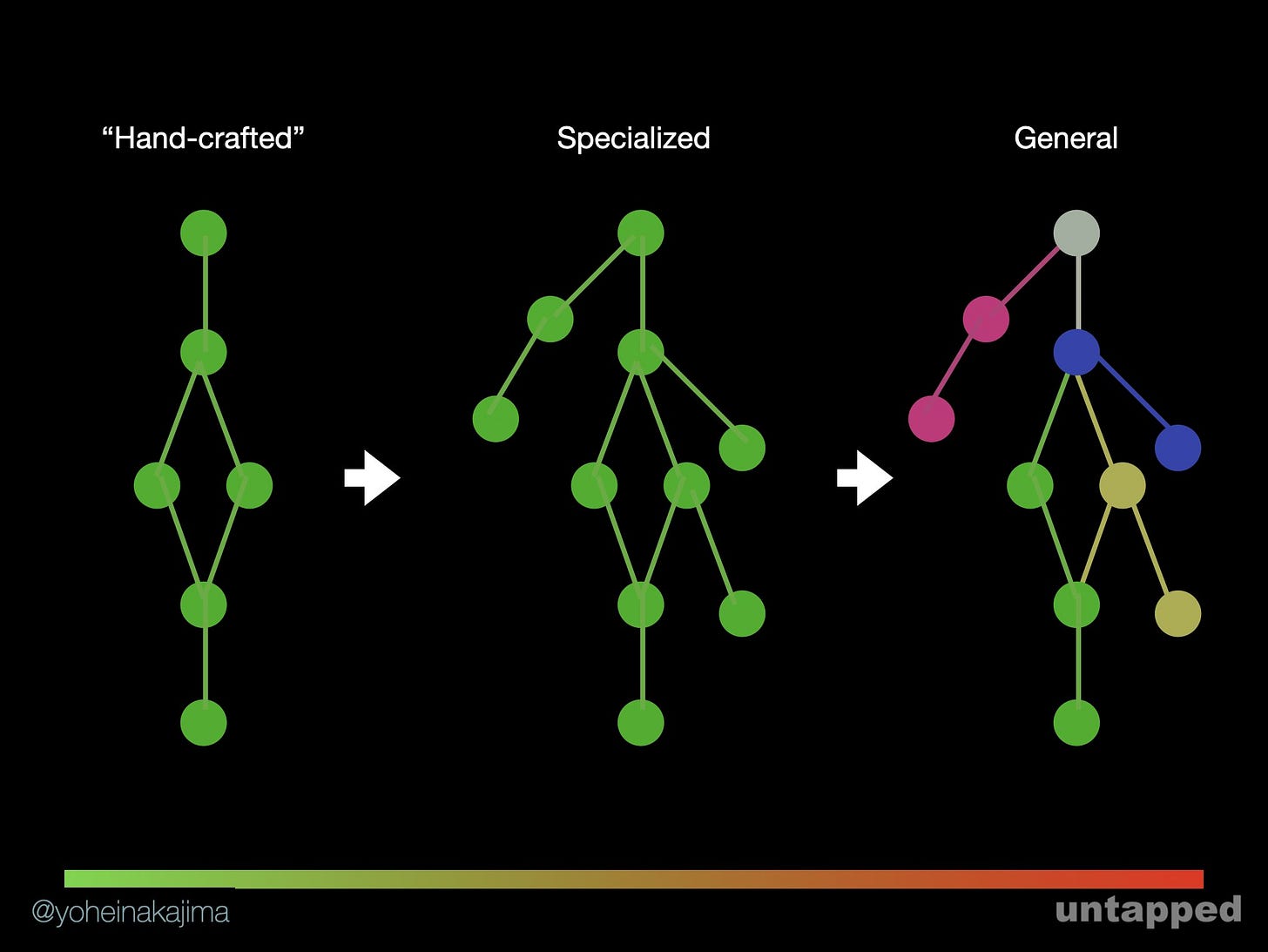
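The decomposition idea can be sketched in a few lines. Everything here is hypothetical: the step names (`extract_text`, `classify_document`, `route`) and their toy logic are stand-ins for illustration, not the Menlo Ventures design or any real agent framework. In a real agent, each step would more likely call a model than a hard-coded rule.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Task:
    """State handed from one step to the next (hypothetical structure)."""
    raw: str
    notes: dict = field(default_factory=dict)


def extract_text(task: Task) -> Task:
    # Stand-in for OCR / PDF parsing.
    task.notes["text"] = task.raw.strip().lower()
    return task


def classify_document(task: Task) -> Task:
    # Stand-in for a model call that labels the document.
    text = task.notes["text"]
    task.notes["kind"] = "invoice" if "invoice" in text else "other"
    return task


def route(task: Task) -> Task:
    # Stand-in for handing off to the right downstream queue.
    queues = {"invoice": "accounts_payable"}
    task.notes["queue"] = queues.get(task.notes["kind"], "manual_review")
    return task


def run_pipeline(task: Task, steps: list[Callable[[Task], Task]]) -> Task:
    """Run each step in order, passing the task along."""
    for step in steps:
        task = step(task)
    return task


if __name__ == "__main__":
    result = run_pipeline(
        Task("  INVOICE #123 from Acme  "),
        [extract_text, classify_document, route],
    )
    print(result.notes["queue"])
```

The design choice worth noticing is that the "agent" is mostly plumbing: small, swappable steps with a shared state object, where anything the rules cannot place falls back to a human review queue.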

As the market sub-segment grows, the exact definition of AI agent is still murky. Is there only one type of agent? Or is there a spectrum to apply the term? For example, here’s a Twitter thread that imagines tranches of complexity, agent scoping, and model quality:

While AI providers deliberate agent types and exact terminology, the wave of new AI agent offerings marches on regardless. Tech companies big and small are invoking the term to help generate AI revenue. Or, at the very least, invoking the term to convince investors that they’ll generate AI revenue someday soon. For example, let’s take a look at recent keynotes from Microsoft and Salesforce:

Meanwhile, on the startup side, “agent” is being used to signal functionality and attract funding. Look at YC’s recent AI listing and do a scan for “agent”. ~50 results, all marketing themselves with the hot term of the season.

On one hand, the agent terminology seems like a more grounded way for consumers and technologists to interact with artificial intelligence. People no longer have to fit every facet of the economy into a chatbot-shaped hole. Sometimes a customer complaint just needs to get routed and served.

On the other hand, “agent” just has a little less pizzazz. At what point are people once again using software, with some AI features in the background? Does such a pragmatic tech stack support double-digit revenue multiples and company names that end in “.ai”?

The agent trend isn’t just an evolution in artificial intelligence features or design. It’s an evolution in expectations.

Presidential Priorities

Dwight David Eisenhower had many roles. 34th President of the United States, five-star general, Supreme Allied Commander of NATO, interstate highway enthusiast, and most notably, Ike.

A newer role that Eisenhower posthumously achieved is productivity guru. As one of the most accomplished Americans of the 20th century, any tactic Eisenhower employed for success is worth studying and evangelizing. And the one with the most enduring appeal is the Eisenhower Decision Matrix.

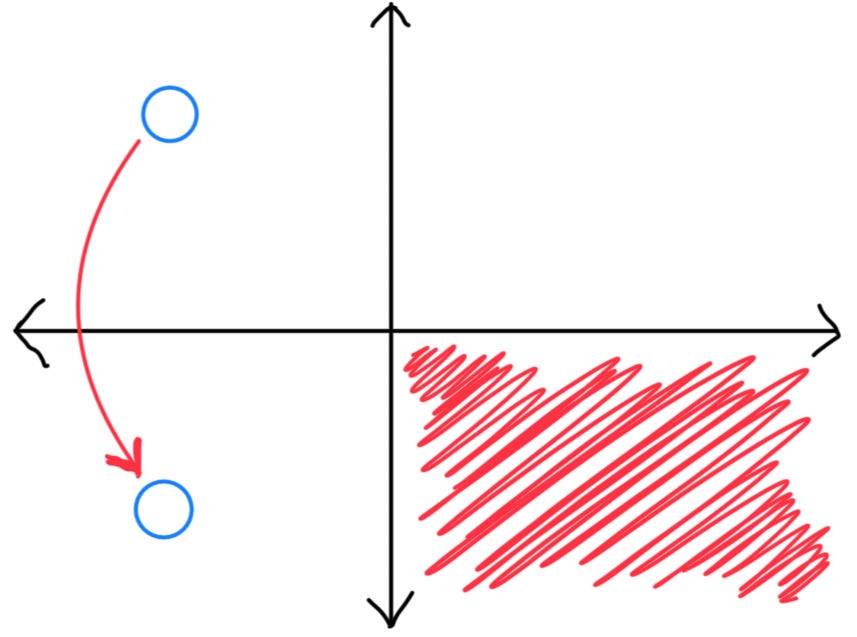

Eisenhower segmented all of the work he had to do with two questions: is it important, and is it urgent? The criteria combine for a 2x2 with four quadrants of work, commonly labeled do, schedule, delegate, and delete.
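As a rough sketch, the 2x2 boils down to a lookup on two yes/no answers. The function name and the quadrant labels other than "delegate" are assumptions based on how the matrix is commonly presented, so treat them as placeholders.

```python
def eisenhower_quadrant(important: bool, urgent: bool) -> str:
    """Map the two yes/no answers to one of four quadrants.

    Labels follow a common presentation of the matrix (an assumption).
    """
    quadrants = {
        (True, True): "do",          # important and urgent
        (True, False): "schedule",   # important, not urgent
        (False, True): "delegate",   # urgent, not important
        (False, False): "delete",    # neither
    }
    return quadrants[(important, urgent)]


if __name__ == "__main__":
    # A routine but time-sensitive chore lands in the delegate corner.
    print(eisenhower_quadrant(important=False, urgent=True))
```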

The 7 Habits of Highly Effective People, published in 1989, helped popularize Eisenhower’s productivity bona fides. The timing was right, as Eisenhower’s system lends itself well to the internet era.

Back in the 1980s, IBM introduced an internal email system for its employees. It took all of one week for the system’s mainframe to be overwhelmed. Prior to the system, IBM had relied on written memos, calls, and in-person meetings. The initial email volume wasn’t an unlock in business productivity. It was employees generating volume simply because they could.

In the 40 years since IBM’s email launch, computer mainframes have more than caught up. The workers reading all that email, however, haven’t kept pace. Eisenhower’s Decision Matrix resonated with the masses because it concisely called out the proliferation of busywork. In the pie chart of total employee hours, an increasingly large piece was taken up by tasks pretty firmly in the “Delegate” corner. For roles that can hire interns, great news!

What about everyone else?

Re-Casting AI

Earlier this month, Ben Affleck made a rare sans-Dunkin public appearance to talk about the media business with CNBC. Like all media panels, the discussion inevitably turned to AI:

“craftsman is knowing how to work. Art is knowing when to stop. I think knowing when to stop is going to be a very difficult thing for AI to learn because it’s taste. And also lack of consistency, lack of controls, lack of quality. AI, for this world of generative video, is going to do key things more — I wouldn’t like to be in the visual effects business. They are in trouble. Because what costs a lot of money is now going to cost a lot less, and it’s going to hammer that space, and it already is. And maybe it shouldn’t take a thousand people to render something. But it’s not going to replace human beings making films.”

Affleck’s analysis of the entertainment business features surprisingly rare pragmatism. Yes, these tools are capable and will have significant ramifications for all types of jobs. But capable does not mean smart. And most importantly, capability is not the same as humanity.

The interview also does a good job discussing two fundamental questions that AI evangelists sometimes seem to ignore:

Practically: will artificial intelligence advance to the point that it can do all of the hype-worthy things being promised? (artificial general intelligence / AGI, digital god, etc.)

Philosophically: what sort of things should humanity want artificial intelligence products to do?

Or, as Jeff Goldblum says in Jurassic Park, “Your scientists were so preoccupied with whether or not they could, they didn't stop to think if they should.”

The doubt surrounding scaling laws has brought the answer to the practical question back down to earth. Essentially “maybe, but probably not so soon, we’ll see”. Oddly, the weakening of scaling laws has allowed for a better answer to the philosophical question. Technology is supposed to help people focus on their most important work, not take it over. Whether that’s directing a movie like Affleck, providing healthcare to a patient, or talking with a customer to understand them (and not robotically treating them like cattle), humans have important and urgent jobs to be done.

But fear not for the AI agents! They can and should have a quadrant of Eisenhower’s Decision Matrix all to themselves. The matrix isn’t just a good litmus test on work that can be done by machines. It’s a definition of the work that can and should only be done by humans.

There’s so much work to delegate. So many PDFs to convert into Excels to convert back to PDFs. The AI sector, nice and full from the slice of scaling laws humble pie, must take command of the agent wave to make products people actually want.

Reading List

Scaling realities: makes a good point that scaling still works, even if slightly slower than in the past.

Also recommend Benedict Evans’ end of year wrap deck, AI eats the world, which looks ahead nicely.

Against the Rules with Michael Lewis: latest season on sports fandom & betting.

The Old Man and the Sun: AT&T Stadium’s sun-k costs (no apologies on that one)

“Jerry Jones is the closest thing we have to an old-timey king in America. He has secured absolute power, millions of followers, and hereditary succession. And you know what kings really love doing? Making arbitrary requests about the massive construction projects they commissioned. (Seriously—pretty recurring theme throughout history.) And he’s insisting that an obvious, fixable problem is actually fine, can’t be changed in any way, and is in fact part of his plan.”

You can call me AI